What just happened? Did you know that Thursday is Global Accessibility Awareness Day? Yeah, neither did I. But the designated day gives companies the perfect opportunity to show how inclusive they are by advertising features that make their products more accessible. Apple is marking the day by previewing additions to its growing list of accessibility features.

Although they aren't expected to arrive until later this year, Apple has announced several additions to the accessibility settings for Mac, iPhone, iPad, and Apple Watch. The features are meant to help people with disabilities use Apple devices more easily, but some of them are also interesting alternatives for anyone looking for more convenient input methods. The new gesture controls for the Apple Watch are the most obvious example, though not the only one.

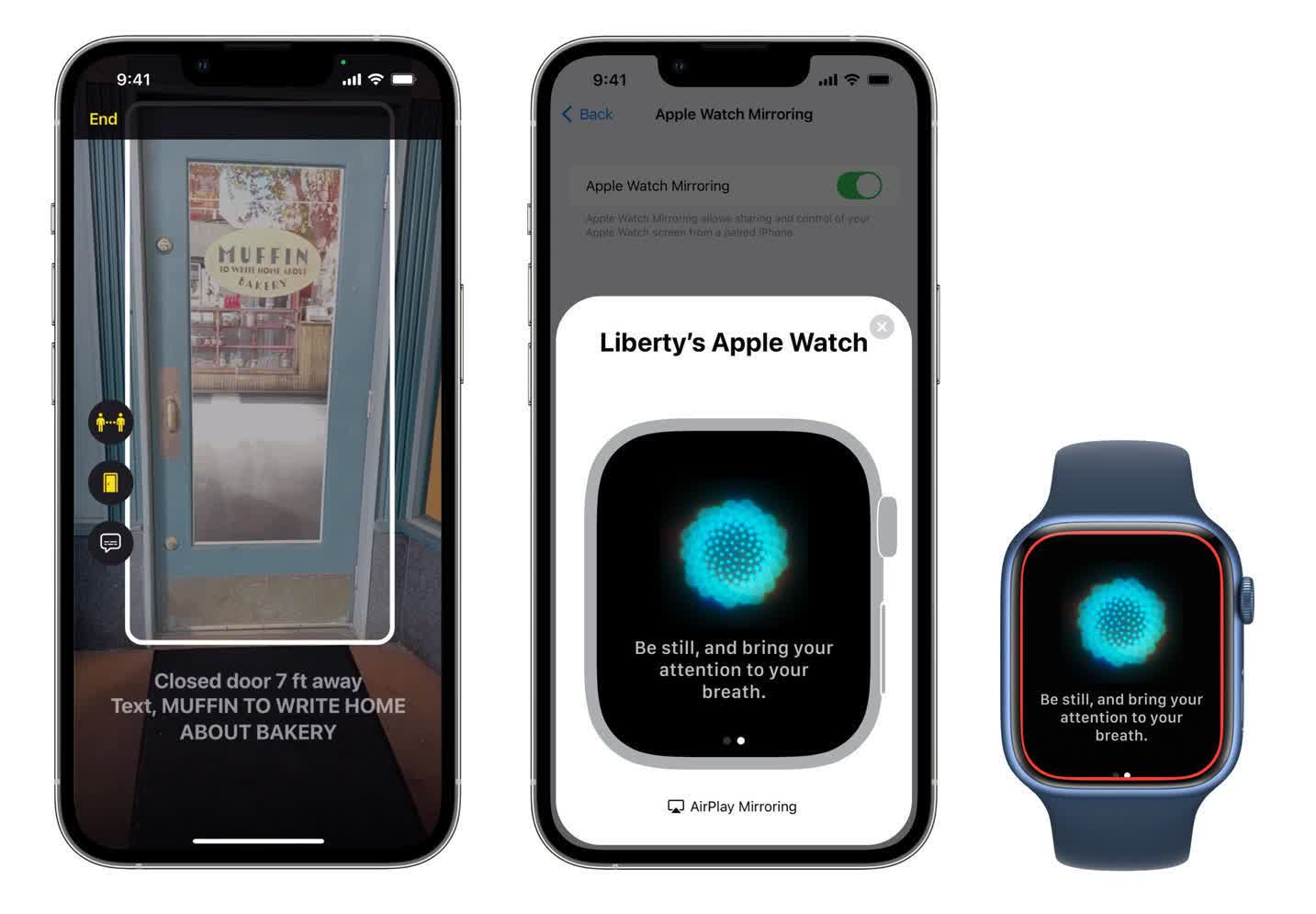

One of the first features revealed is Door Detection, which is designed for people who are blind or have low vision. It uses the camera, LiDAR scanner, and machine learning on newer iPhone and iPad models to help people navigate buildings.

When users arrive at a new location, the feature can tell them where a door is, how far away it is, and how to open it, whether by turning a knob, pulling a handle, or something else. It can also read signs and symbols around the door, such as room numbers or accessibility signs.

Next, Apple is developing Live Captions for people who are deaf or hard of hearing. Live captions aren't exactly new: Android devices have had a similar feature for some time. Now, however, iPhones, iPads, and Macs will be able to overlay real-time captions on video calls, including FaceTime. The feature can also transcribe audio around the user.

However, two capabilities set Apple's Live Captions apart from Android's. The first is speaker labeling in FaceTime, which makes it easy to keep track of who is talking. The second is that on a Mac, users can type a response and have it read aloud in real time. This last feature may be useful for people with aphasia or others who have difficulty speaking. Unfortunately, Live Captions will only be available in English when Apple releases the beta in the US and Canada later this year.

Finally, there are some interesting Apple Watch features. The first is Apple Watch Mirroring. This setting lets people with motor control issues operate their Apple Watch without fumbling with its small screen. It mirrors the watch to the user's paired iPhone using AirPlay, enabling various input methods, including Voice Control, head tracking, and external Made for iPhone switches.

Another innovative accessibility feature on the Apple Watch is Quick Actions. These build on the hand gestures Apple introduced last year, such as touching your index finger and thumb together (a pinch), which the watch detects as input. This year, Apple has made the gestures easier to discover and expanded the list of things users can control with them.

For example, a single pinch can move to the next menu item, and a double pinch can go back to the previous one. Answering or declining a call with a simple hand gesture while driving could be useful even for people without motor control problems. Users can also use gestures to dismiss notifications, trigger the camera shutter, pause media in Now Playing, and control workouts. There will likely be many more uses, but these are the specific ones Apple mentioned.

Several additional features will also roll out later this year, including Buddy Controller, Siri Pause Time, Voice Control Spelling Mode, and Sound Recognition. You can read about what they do in Apple's press release.
